ICP

ICP

Definition

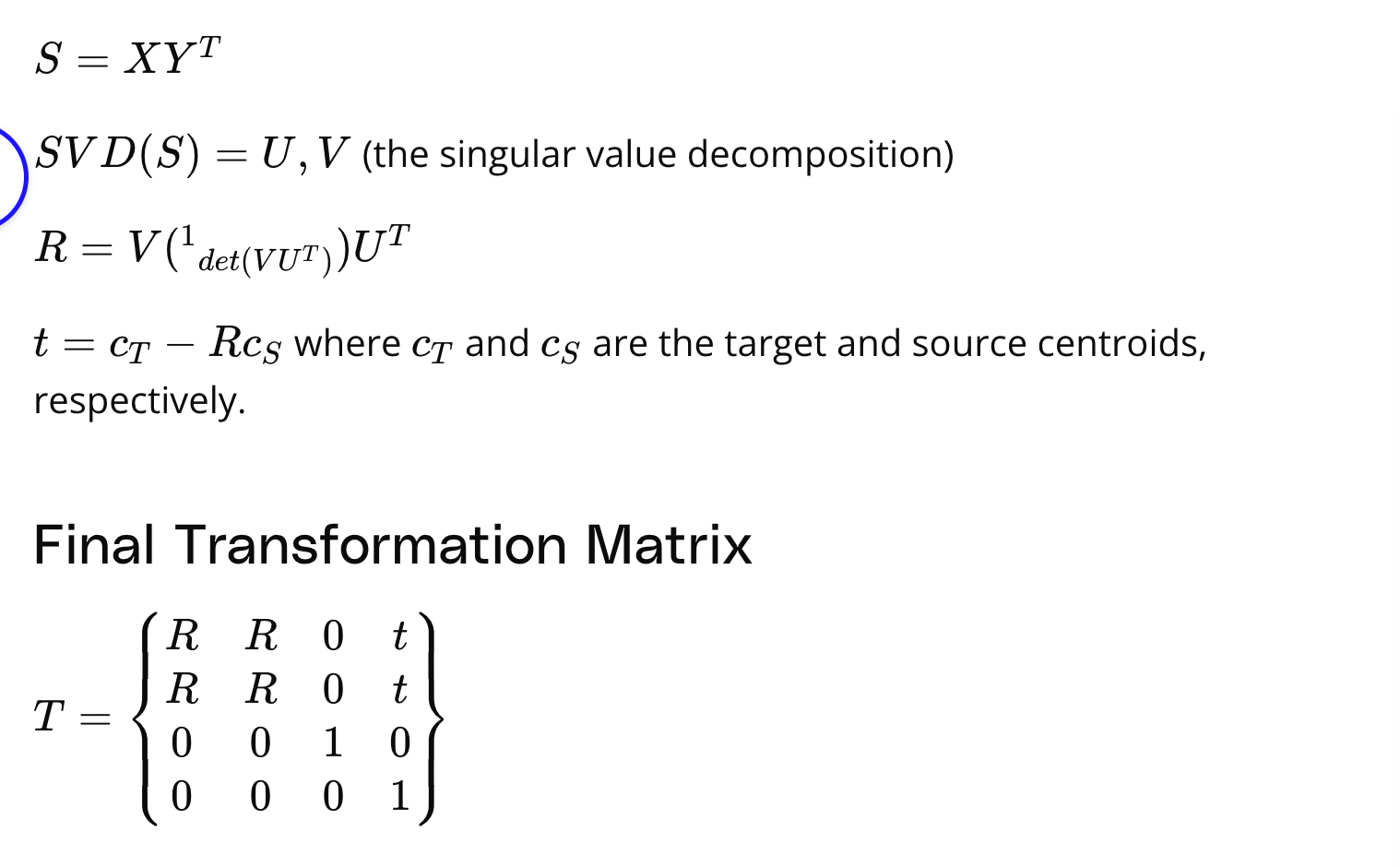

- minimize sum of distance and find the homegeneous matrix(rotation and transfer?)

Example code

- icp.cpp seems to be a source code file that contains a function named ICP, which stands for Iterative Closest Point. This function takes two PointCloudT pointers (target and source), a starting pose, and the number of iterations as input parameters. It then aligns the source point cloud with the target point cloud using the ICP algorithm and returns the transformation matrix that aligns the two point clouds.

The main function in the code excerpt seems to be creating a PCLVisualizer object and setting its background color. However, without more context, it is difficult to determine the exact role of icp.cpp in the overall project.

using namespace std;

#include <string>

#include <sstream>

#include "helper.h"

#include <pcl/registration/icp.h>

#include <pcl/console/time.h> // TicToc

Eigen::Matrix4d ICP(PointCloudT::Ptr target, PointCloudT::Ptr source, Pose startingPose, int iterations){

// initialize transform matrix as identity

Eigen::Matrix4d transformation_matrix = Eigen::Matrix4d::Identity ();

// align source with starting pose

Eigen::Matrix4d initTransform = transform2D(startingPose.theta, startingPose.position.x, startingPose.position.y);

PointCloudT::Ptr transformSource (new PointCloudT);

pcl::transformPointCloud (*source,* transformSource, initTransform);

pcl::console::TicToc time;

time.tic ();

pcl::IterativeClosestPoint<PointT, PointT> icp;

icp.setMaximumIterations (iterations);

icp.setInputSource (transformSource);

icp.setInputTarget (target);

PointCloudT::Ptr cloud_icp (new PointCloudT); // ICP output point cloud

icp.align (*cloud_icp);

if (icp.hasConverged ())

{

std::cout << "\nICP has converged, score is " << icp.getFitnessScore () << std::endl;

transformation_matrix = icp.getFinalTransformation ().cast<double>();

transformation_matrix = transformation_matrix * initTransform;

return transformation_matrix;

}

cout << "WARNING: ICP did not converge" << endl;

return transformation_matrix;

}

int main(){

pcl::visualization::PCLVisualizer::Ptr viewer (new pcl::visualization::PCLVisualizer ("2D Viewer"));

viewer->setBackgroundColor (0, 0, 0);

viewer->addCoordinateSystem (1.0);

// create a room

double lowerX = -5;

double upperX = 5;

double lowerY = -5;

double upperY = 5;

vector<LineSegment> room;

LineSegment top(0, 1, upperY, lowerX, upperX);

room.push_back(top);

LineSegment bottom(0, 1, lowerY, lowerX, upperX);

room.push_back(bottom);

LineSegment right(1, 0, upperX, lowerY, upperY);

room.push_back(right);

LineSegment left(1, 0, lowerX, lowerY, upperY);

room.push_back(left);

// create lidar

Lidar lidar(0, 0, 0, 100, 128);

PointCloudT::Ptr poses (new PointCloudT); // ground truth

PointCloudT::Ptr locator (new PointCloudT); // estimated locations

// starting location

poses->points.push_back(PointT(lidar.x, lidar.y, 0));

locator->points.push_back(PointT(lidar.x, lidar.y, 0));

// get map of room

PointCloudT::Ptr map = lidar.scan(room);

cout << "map captured " << map->points.size() << " points" << endl;

// move around the room

// Part 1. TODO: localize from single step

vector<Vect2> movement = {Vect2(0.5,pi/12)};

// Part 2. TODO: localize after several steps

if(true){ // Change to true

movement.push_back(Vect2(0.8, pi/10));

movement.push_back(Vect2(1.0, pi/6));

}

// Part 3. TODO: localize after randomly moving around the whole room

if(true){ // Change to true

srand(time(0));

for(int i = 0; i < 10; i++){

double mag = 0.5 * ((double) rand() / (RAND_MAX)) + 0.5;

double angle = pi/8 * ((double) rand() / (RAND_MAX)) + pi/8;

movement.push_back(Vect2(mag, angle));

}

}

renderPointCloud(viewer, map, "map", Color(0,0,1)); // render map

Pose location(Point(0,0), 0);

PointCloudT::Ptr scan;

int count = 0;

for( Vect2 move : movement ){

// exectue move

lidar.Move(move.mag, move.theta);

poses->points.push_back(PointT(lidar.x, lidar.y, 0));

// scan the room

scan = lidar.scan(room);

cout << "scan captured " << scan->points.size() << " points" << endl;

renderPointCloud(viewer, scan, "scan_"+to_string(count), Color(1,0,0)); // render scan

// perform localization

Eigen::Matrix4d transform = ICP(map, scan, location, 50); //TODO: make the iteration count greater than zero

Pose estimate = getPose(transform);

// TODO: save estimate location and use it as starting pose for ICP next time

location = estimate;

locator->points.push_back(PointT(estimate.position.x, estimate.position.y, 0));

// view transformed scan

PointCloudT::Ptr transformed_scan (new PointCloudT);

pcl::transformPointCloud (*scan,* transformed_scan, transform);

renderPointCloud(viewer, transformed_scan, "icp_scan_"+to_string(count), Color(0,1,0)); // render corrected scan

count++;

}

// display ground truth poses vs estimated pose

renderPointCloud(viewer, poses, "poses", Color(0,1,0), 8);

renderPath(viewer, poses, "posePath", Color(0,1,0) );

renderPointCloud(viewer, locator, "locator", Color(0,0,1), 6);

renderPath(viewer, locator, "locPath", Color(0,0,1) );

while (!viewer->wasStopped ())

{

viewer->spinOnce ();

}

return 0;

}

How to

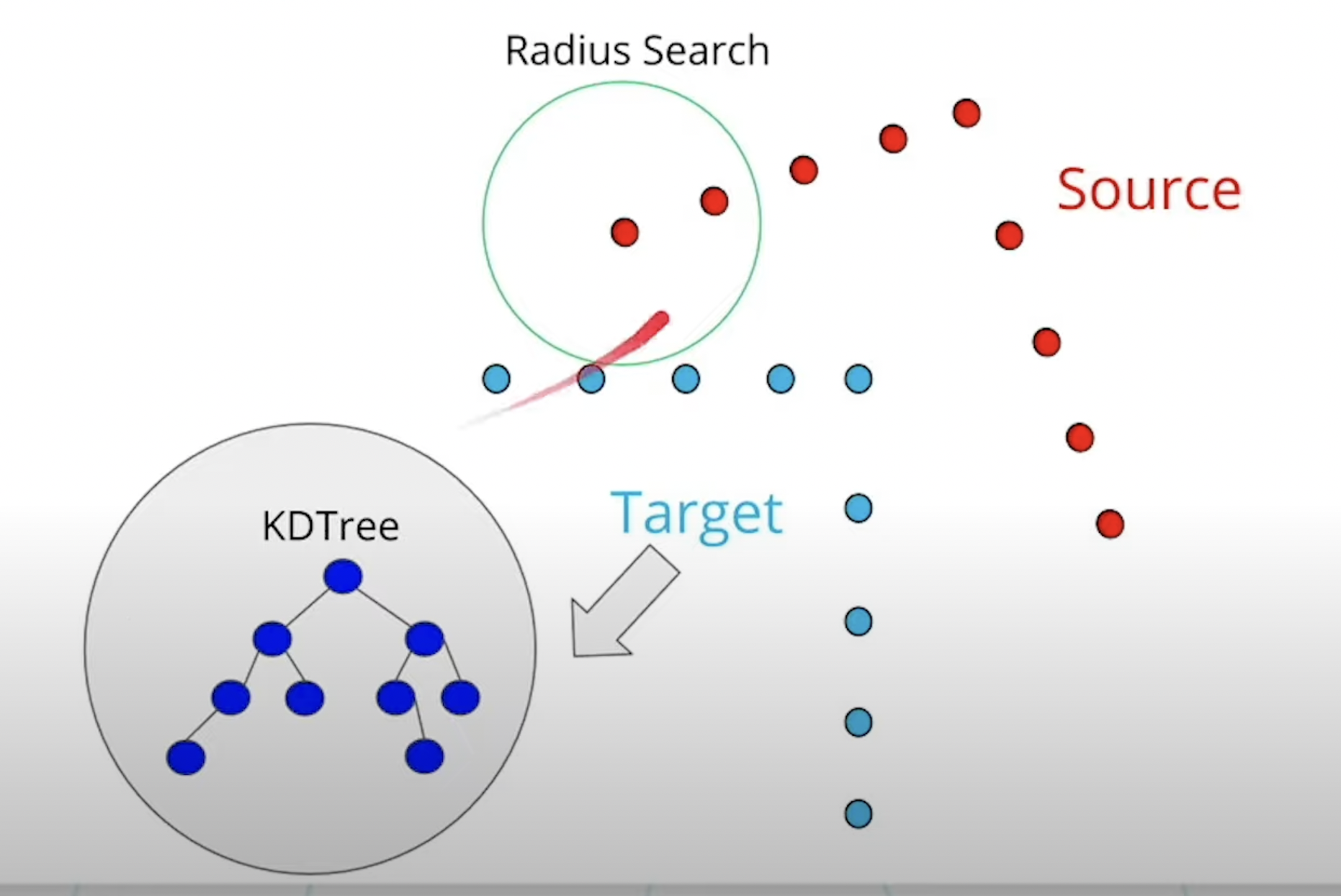

- Use target, source, KD - tree(We can do it in logarithm time instead using time O(N))

- With radius search, it can find the nearest target.

-

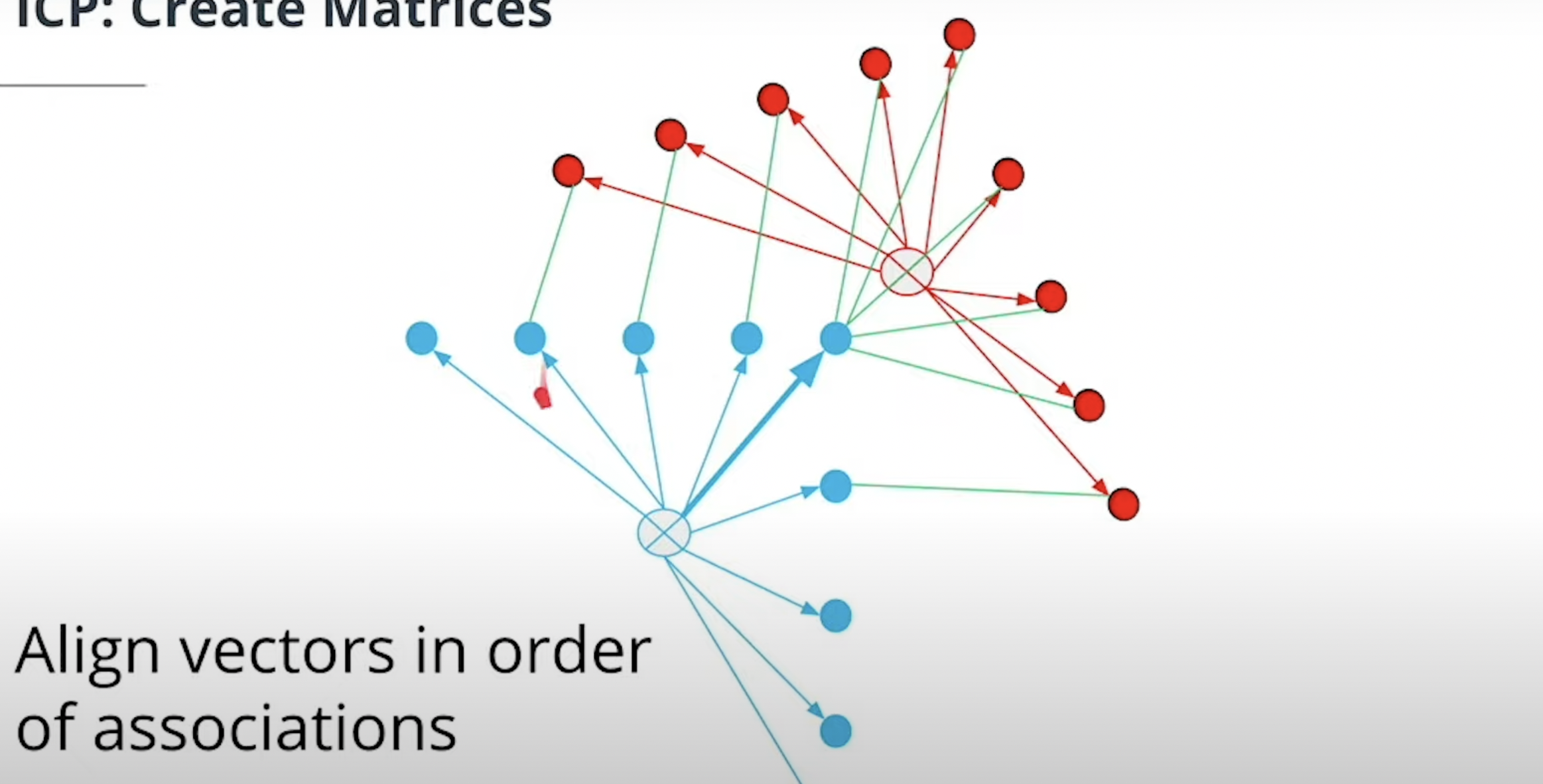

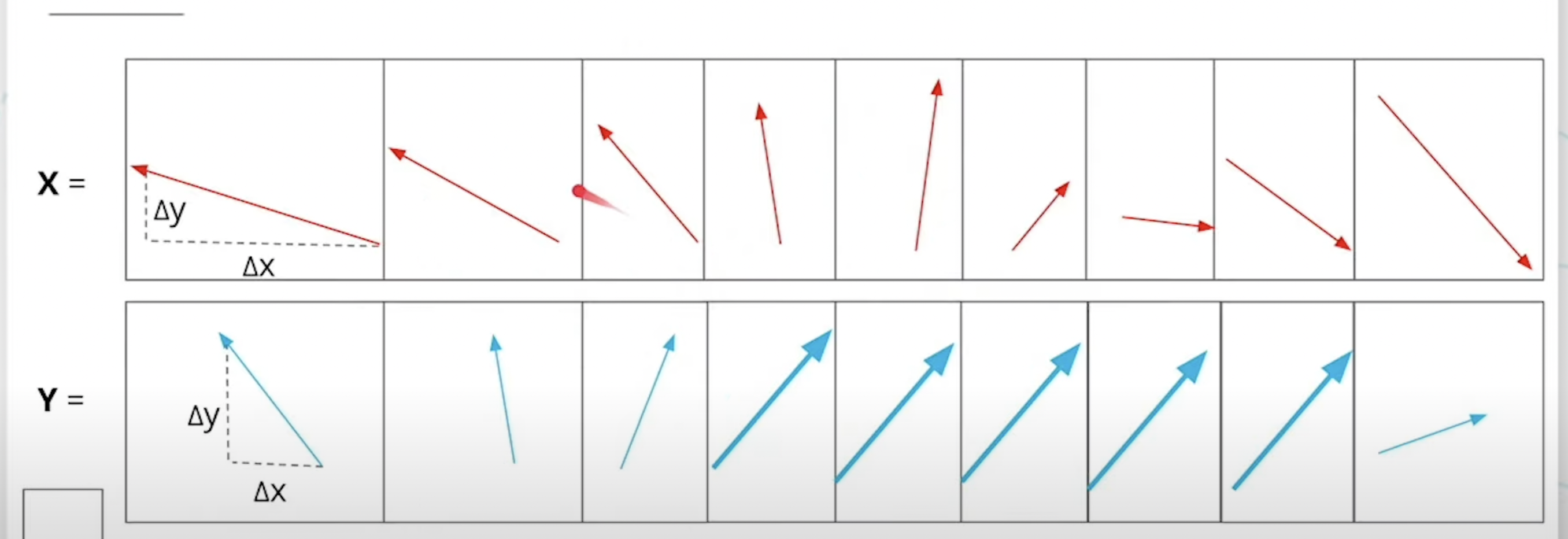

- After that you can find vector association about the nearest point and each source.

- Calcuate centroid, and make vector group of centroid and each points.

-

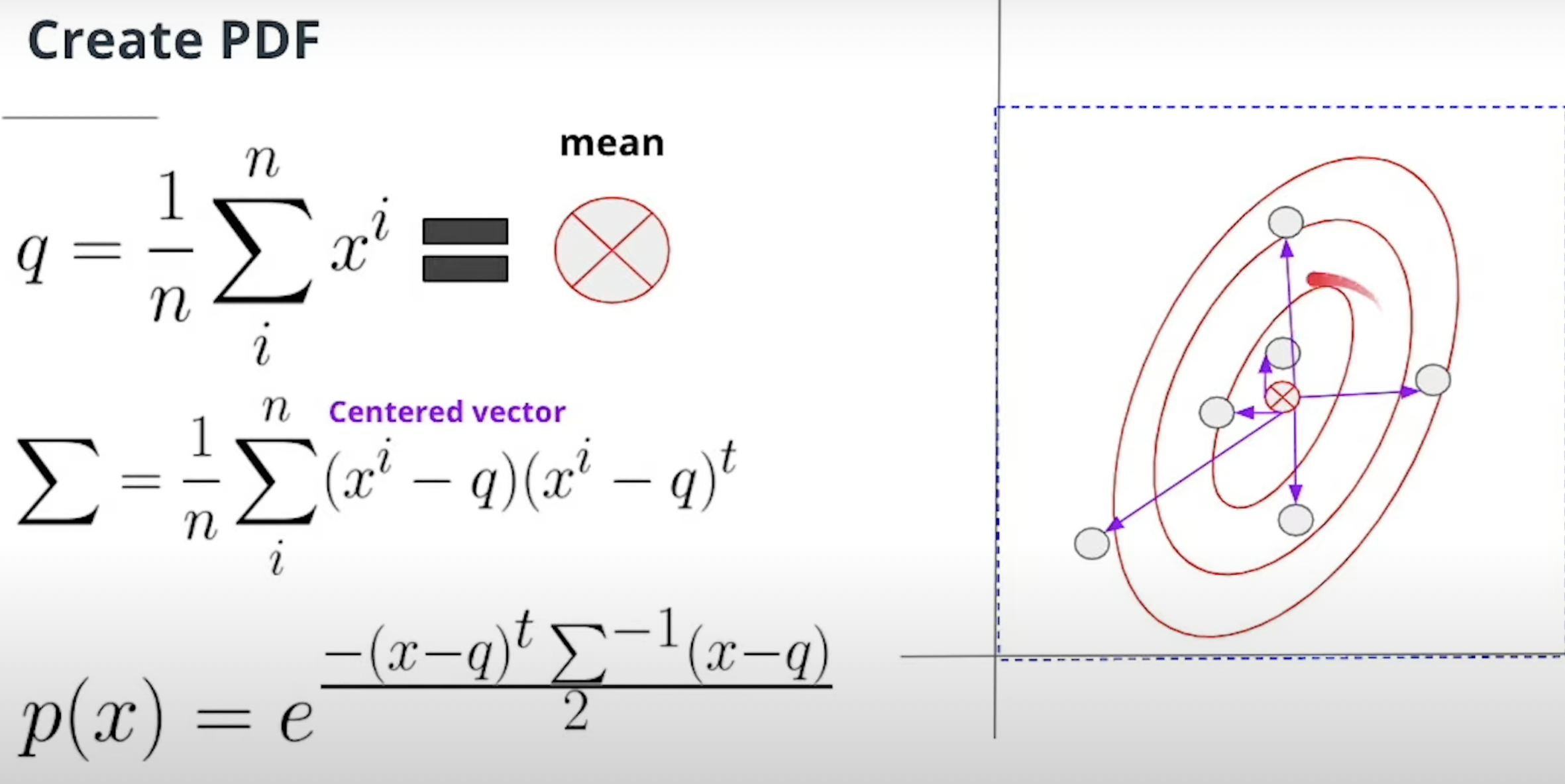

Normal distribution function

- Makes 2D gaussian graph into Probability Density function.

- I think it is the process of 2D graph into mathmatical equation.

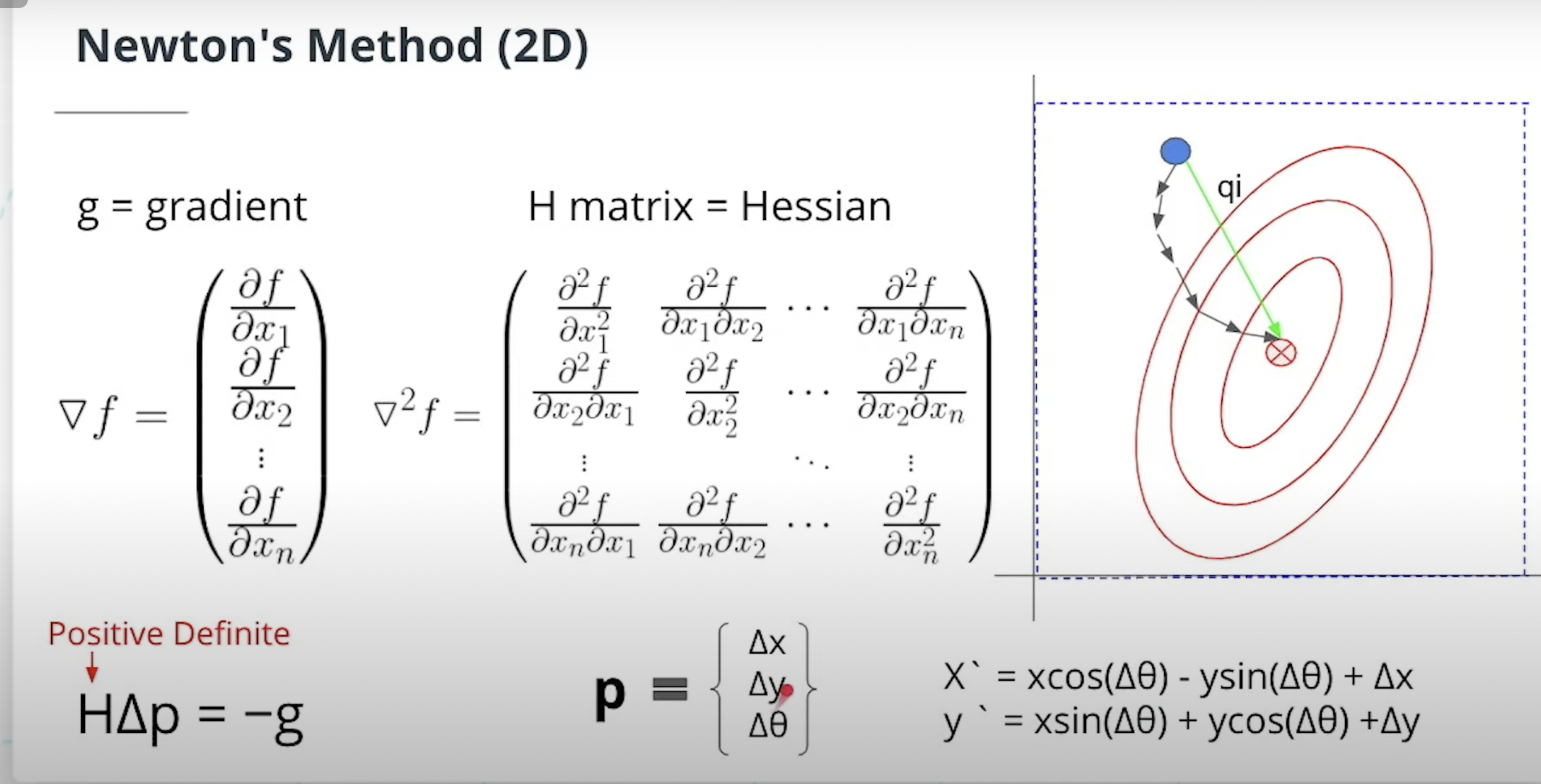

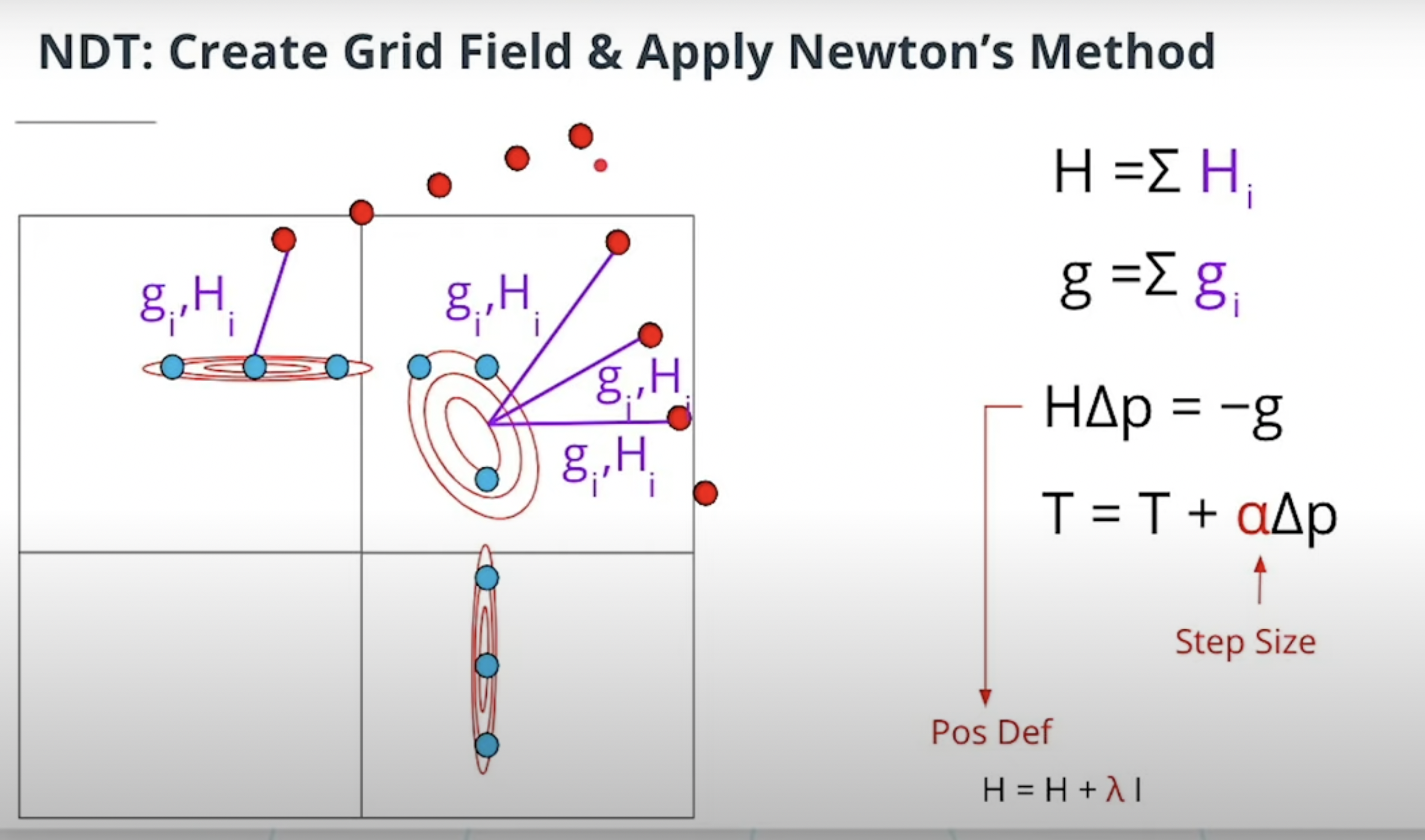

Newton’s method

- To find the peak of 2D gaussian, we’ll use newton’s method.

- Newton’s method is used to iteratively refine the estimate of the peak location by finding the root of the gradient of the Gaussian function.

- And next, I guess it will find whether function would be converged, or find minimum or maximum value.

-

- With gradient, we can find the peak of 2D gaussian.

- It gradually finds the peak value and be getting closer and closer.

- Why we use NDT and apply newton’s method? Find for what? What is the purpose?

Leave a comment